A Visual Language for Image Manipulation

24 August 2024

Updated: 23 December 2025

I’ve spent the past few weeks building a concept photo editing application that allows users to visually define and compose shaders to transform an image in ways that are really challenging or impossible in other image manipulation software

For readers of my website it’s probably not too much of a surprise that I’ve been spending a lot of time learning about Parser Combinators as well as Shaders. Armed with this information, it looks like I accidentally created a Visual Programming Language - this post will talk about how that all works

Why

Every time I’ve shown anyone the app the first question was “Why do you need this?”. I think it’s fair to say that no one really needs this. This exists to satisfy the artistic urge to do create something new. I’ve spent so much time feeling limited by the tools I have for working with images are lacking in creative expression. I think there’s an intersection between computational/generative art and photography that’s underserved and it’s a space I would like to play in. I wanted to create a tool that allows creative exploration of images and helps break away from the “filter” based approach that dominates how we edit images

It also seemed like a challenge, it begged to be done.

The App

The high level architecture that makes this all work looks something like this:

- I define different types of functions that can be applied to an image using a shader function written in WebGPU

- The shader is then parsed into a Node that a user can use in the editing workflow

- A user can structure Nodes and Edges into a tree for applying edits

- The tree is compiled into shader code and inputs to the shader (Bindings/Uniforms) are identified

- The final shader is applied to image and is updated when a user modifies any Bindings

Shaders

Shaders are programs that run on the GPU. Due to this fact there are some interesting limitations on how shaders work and what they can do. For working in our application a shader consists of two parts, a Vertex Shader and a Fragment Shader. The Vertex Shader is related to objects that are being rendered in a scene, since we’re rendering an image this isn’t of too much consequence, and for our purposes this always looks the same:

1struct VertexOutput {2 @builtin(position) position: vec4f,3 @location(0) texcoord: vec2f,4};5

6

7@node fn vs(8 @builtin(node_index) nodeIndex : u329) -> VertexOutput {10 const pos = array(11 vec2( 1.0, 1.0),12 vec2( 1.0, -1.0),13 vec2(-1.0, -1.0),14 vec2( 1.0, 1.0),15 vec2(-1.0, -1.0),16 vec2(-1.0, 1.0),17 );18

19 var vsOutput: VertexOutput;20

21 let xy = pos[nodeIndex];22 vsOutput.texcoord = pos[nodeIndex] * vec2f(0.5, 0.5) + vec2f(0.5);23 vsOutput.position = vec4f(pos[nodeIndex], 0, 1);24

25 return vsOutput;26}The data output from the Vertex shader is sent to the Fragment shader. We’ve defined a struct for the data I want to pass called VertexOutput

The Fragment shader is where things get interesting though. This is where I apply transformations to pixel data and it allows us to do stuff to our image. For now I’ve defined the main part of our Fragment shader as follows:

1@group(0) @binding(0) var ourSampler: sampler;2@group(0) @binding(1) var ourTexture: texture_2d<f32>;3

4// BINDINGS_SECTION_START5// BINDINGS_SECTION_END6

7@node fn sample(texcoord: vec2f) -> vec4f {8 return textureSample(ourTexture, ourSampler, texcoord);9}10

11@fragment fn fs(fsInput: VertexOutput) -> @location(0) vec4f {12 var input__result = fsInput;13 // MAIN_SECTION_START14 return textureSample(ourTexture, ourSampler, fsInput__result.texcoord);15 // MAIN_SECTION_END16}In the above I have also defined a function called sample which has the @node decorator - this is important - our app will use this decorator to identify methods that will be available in the UI that can be applied to images. The sample decorator simply gets the color of a pixel in the input for a given coordinate. In fragment shader I need to output a color as denoted by the return type of the fs function

From the perspective of a shader our goal is to provide a library of functions that apply transformations that users can compose to apply an edit to an image, a simple example of this are the splitChannels and combineChannels functions that we can see below:

1struct SplitChannelsOutput {2 r: f32,3 g: f32,4 b: f32,5 a: f32,6};7

8@node fn splitChannels(color: vec4f) -> SplitChannelsOutput {9 var output: SplitChannelsOutput;10

11 output.r = color.r;12 output.g = color.g;13 output.b = color.b;14 output.a = color.a;15

16 return output;17}18

19@node fn combineChannels(r: f32, g: f32, b: f32, a: f32) -> vec4f {20 return vec4f(r, g, b, a);21}The above methods can be composed in interesting ways to do things like swap around the green and blue channels of an image:

1@fragment fn fs(fsInput: VertexOutput) -> @location(0) vec4f {2 var split = splitChannels(color);3 var result = combineChannels(color.r, color.b, color.g, color.a);4 return result;5}So this is the basic concept, a bit of complexity comes in when we consider that it’s possible that a function may want inputs from multiple other functions but this still doesn’t create too much of a hurdle for us as we’ll discuss later

Parsing

I wanted to ensure that the application has a single source of truth for the available functions. In order to do this I wanted to use the shader to inform us as to what functions should be available for a user. In order to do this I had to create a parser for the WebGPU langauge. The parser I built is a little simplified since there are only certain things I care about when parsing the shader. It’s also optimized for maintenance and probably isn’t very fast. My main goal here was to get something working. I wrote the parser using a library called ts-parsec which lets me define parsers using parser combinators.

Defining a parser using this library requires a list of tokens to be defined using regexes. Below are the tokens I currently have defined:

1enum Tok {2 Var = 'Var',3 Let = 'Let',4 Const = 'Const',5 Struct = 'Struct',6 Return = 'Return',7 Fn = 'Fn',8 At = 'At',9 Arrow = 'Arrow',10 Integer = 'Integer',11 Comma = 'Comma',12 Dot = 'Dot',13 Plus = 'Plus',14 Semicolon = 'Semicolon',15 Colon = 'Colon',16 Dash = 'Dash',17 Asterisk = 'Asterisk',18 Slash = 'Slash',19 Equal = 'Equal',20 OpenParen = 'OpenParen',21 CloseParen = 'CloseParen',22 OpenBrace = 'OpenBrace',23 CloseBrace = 'CloseBrace',24 OpenBracket = 'OpenBracket',25 CloseBracket = 'CloseBracket',26 OpenAngle = 'OpenAngle',27 CloseAngle = 'CloseAngle',28 Identifier = 'Identifier',29 Space = 'Space',30 Comment = 'Comment',31}Along with the tokenizer. Each token is configured as [shouldKeepToken, regex, token]

1export const tokenizer = ts.buildLexer([2 [true, /^var/g, Tok.Var],3 [true, /^let/g, Tok.Let],4 [true, /^const/g, Tok.Const],5 [true, /^struct /g, Tok.Struct],6 [true, /^fn/g, Tok.Fn],7 [true, /^return/g, Tok.Return],8 [true, /^->/g, Tok.Arrow],9 [true, /^@/g, Tok.At],10 [true, /^,/g, Tok.Comma],11 [true, /^\./g, Tok.Dot],12 [true, /^\+/g, Tok.Plus],13 [true, /^\;/g, Tok.Semicolon],14 [true, /^\:/g, Tok.Colon],15 [true, /^\-/g, Tok.Dash],16 [true, /^\*/g, Tok.Asterisk],17 [true, /^\//g, Tok.Slash],18 [true, /^\=/g, Tok.Equal],19 [true, /^\(/g, Tok.OpenParen],20 [true, /^\)/g, Tok.CloseParen],21 [true, /^\{/g, Tok.OpenBrace],22 [true, /^\}/g, Tok.CloseBrace],23 [true, /^\[/g, Tok.OpenBracket],24 [true, /^\]/g, Tok.CloseBracket],25 [true, /^\</g, Tok.OpenAngle],26 [true, /^\>/g, Tok.CloseAngle],27

28 [true, /^\d+?/g, Tok.Integer],29 [true, /^[A-Za-z]+([A-Za-z0-9_]*)/g, Tok.Identifier],30 [false, /^\s+/g, Tok.Space],31 [false, /^\/\/ .*/g, Tok.Comment],32])You can see that I throw away comments and spaces since I don’t care about these for now

A relatively simple parser that I have is

All parsers in my application are defined using classes to just help keep things a little tidy. One of the simpler parsers I have is the one that parses integers and is defined as follows:

1export class Integer {2 constructor(public value: number) {}3

4 static parser = ts.apply(ts.tok(Tok.Integer), (t) => new Integer(+t.text))5}This consists of a constructor for defining the members and a static parser function which defines a parser for the current class. We can compose these parsers to build more complex ones, for example the way I parse Generic uses Integer for an optional length that a generic may have, for example array<f32, 3>:

1export class Generic {2 constructor(3 public type: TypeDeclaration,4 public length?: Integer,5 ) {}6

7 static parser = ts.apply(8 ts.kmid(9 ts.tok(Tok.OpenAngle),10 ts.seq(11 TypeDeclaration.parser,12 ts.opt(ts.kright(ts.tok(Tok.Comma), Integer.parser)),13 ),14 ts.tok(Tok.CloseAngle),15 ),16 (matches) => new Generic(...matches),17 )18}The composition of these parses results in a tree looking something like this:

1TypeDeclaration {2 "generic": Generic {3 "length": Integer {4 "value": 5,5 },6 "type": TypeDeclaration {7 "generic": undefined,8 "identifier": Identifier {9 "name": "i32",10 },11 },12 },13 "identifier": Identifier {14 "name": "array",15 },16}At a high level, we can apply this to the entire file to get a syntax tree for the whole file, but as you can imagine that starts to get much bigger

The UI

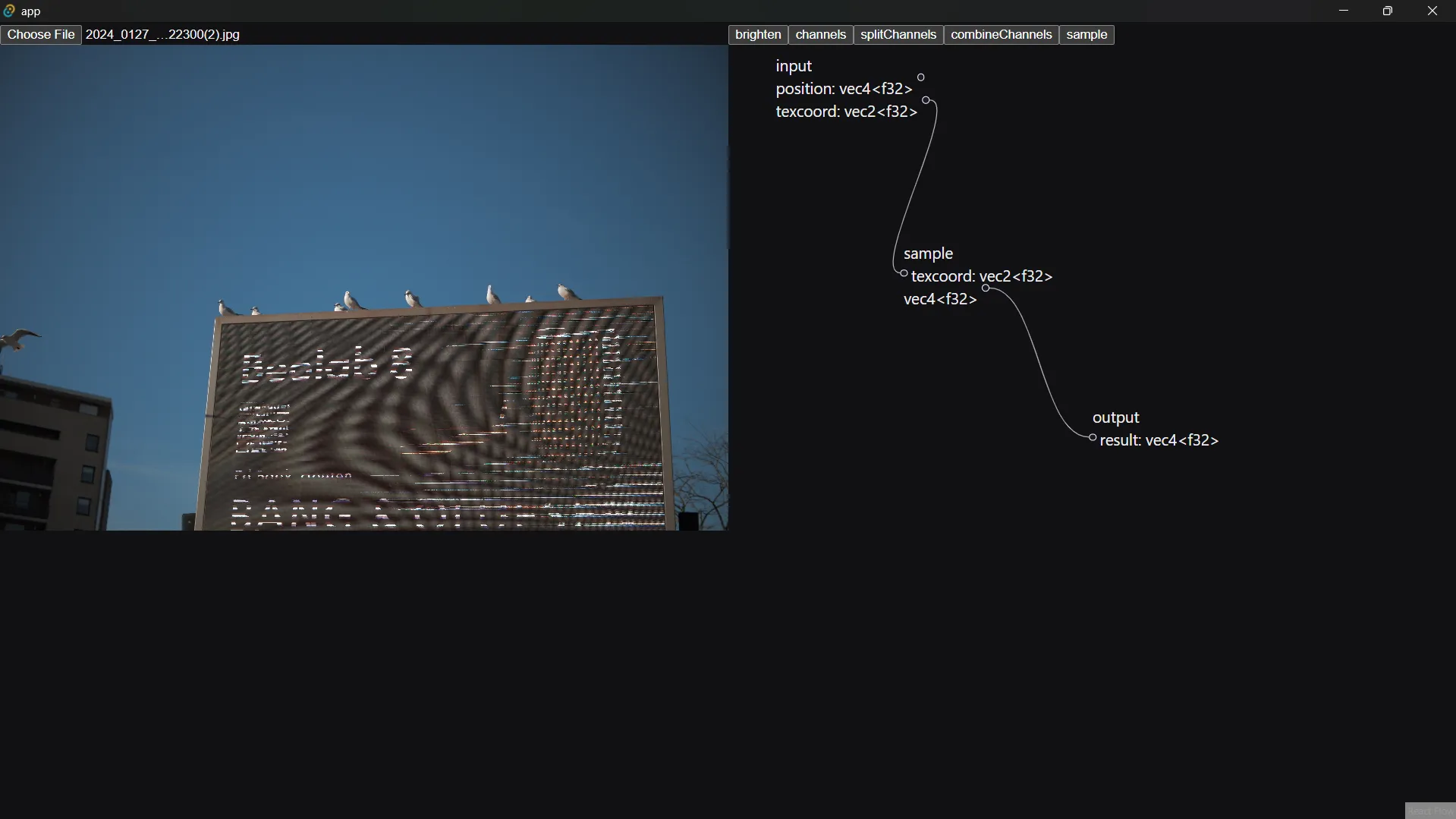

Now, since I’m able to parse the file, I can extract the functions from it and use that to define the different things a user can do in the UI. A simple example of some UI that uses the sample function defined previously can be seen below:

In this we are able to define Nodes that have certain inputs and outputs which can be connected to each other. Each node is a function call and each edge refers to using the result of one function as an input to another function. When defining connections it’s important to verify that connections are of the same type and don’t create a cycle in any way - while fiddly, the code to do this isn’t particularly interesting

This step is actually relatively simple, the complexity comes into converting this back to the WebGPU code.

Compiling

This tree structure effectively represents a Dependency Graph where all functions depend on their inputs, and the final output node depends on any previous edges. The goal in this step is to build a tree out of the nodes and edges and then sort this in a way that defines the order that different operations need to be applied to obtain the output value

The first step to this is compiling a list of nodes and edges into a tree. I’ve done this using the following:

1interface ReadonlyOrderedMap<T> extends ReadonlyMap<Id, T> {2 orderedValues(): ReadonlyArray<T>3 orderedKeys(): ReadonlyArray<Id>4}5

6/**7 * Wraps the builtin `Map` with an easy way to get keys and values as an array8 * sorted by the original insertion order9 *10 * @see MDN: [Map is insertion-order aware](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Map)11 */12class OrderedMap<T> extends Map<Id, T> implements ReadonlyOrderedMap<T> {13 orderedValues() {14 return Array.from(this.values())15 }16

17 orderedKeys() {18 return Array.from(this.keys())19 }20}21

22export function buildTree<T extends Node, E extends Edge>(23 nodes: T[],24 edges: E[],25): OrderedMap<Connected<T, E>> {26 const map = new OrderedMap<Connected<T, E>>()27

28 for (const node of nodes) {29 map.set(node.id, { ...node, connections: [] })30 }31

32 for (const edge of edges) {33 const from = map.get(edge.from)34 const to = map.get(edge.to)35

36 if (from && to) {37 from.connections.push([edge, to])38 }39 }40

41 return map42}This helps us go from the list of connections to a tree that represents the relationships between the nodes - this looks pretty much the same as the UI from earlier

Next, we need to sort the nodes in an order that ensures that each node comes after any nodes that it depends on, this is called a Topographic Sort and I’ve loosely implemented it as follows:

1/**2 * Does a depth-first search on the input vertices relative to the given ID.3 *4 * @returns a map of the nodes reachable from5 */6export function topologicalSort<T extends Vertex, E extends Edge>(7 vertices: ReadonlyMap<Id, Connected<T, E>>,8 relative: Id,9) {10 const visited = new OrderedMap<Connected<T, E>>()11

12 const rec = (at: Connected<T, E>) => {13 if (visited.has(at.id)) return14

15 for (const [_, child] of at.connections) rec(child)16

17 // Insert self after all children have been inserted to maintain ordering18 visited.set(at.id, at)19 }20

21 const initial = vertices.get(relative)22 if (!initial) {23 console.error('Relative node not in tree', relative, vertices)24 throw new Error('Relative node not provided')25 }26

27 rec(initial)28 return visited29}Using this function, I can transform the earlier example for sampling an image into a list that looks something like [input, sample, output]

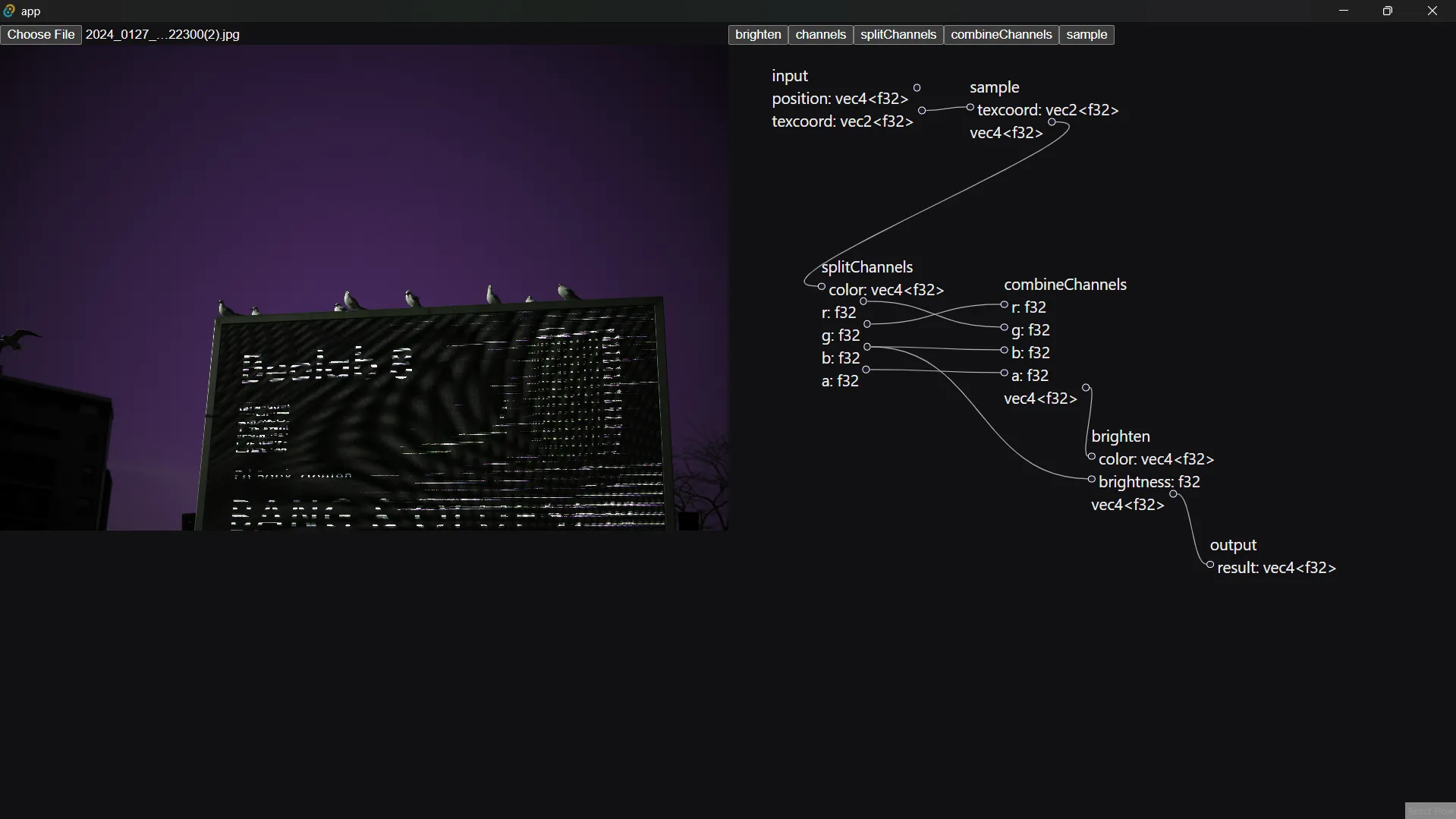

What’s nice is that this will also work with a more complicated set of connections like so:

Can be compiled into [input, sample, splitChannels, combineChannels, brighten, output]. It’s worth taking a moment to let this idea sink in because this is the core idea that allows us to compile the UI based language into a shader

Once we are able to order our nodes, it’s a simple matter of iterating through them in order and determining which inputs are needed and which functions these nodes refer to, the output of this looks something like:

1@fragment fn fs(fsInput: VertexOutput) -> @location(0) vec4f {2 var value1 = sample(input.texcoord);3 var value2 = splitChannels(value1);4 var value3 = combineChannels(value2.g, value2.r, value2.b, value2.a);5 var value4 = brighten(value3, value2.b);6 return value4;7}User Input

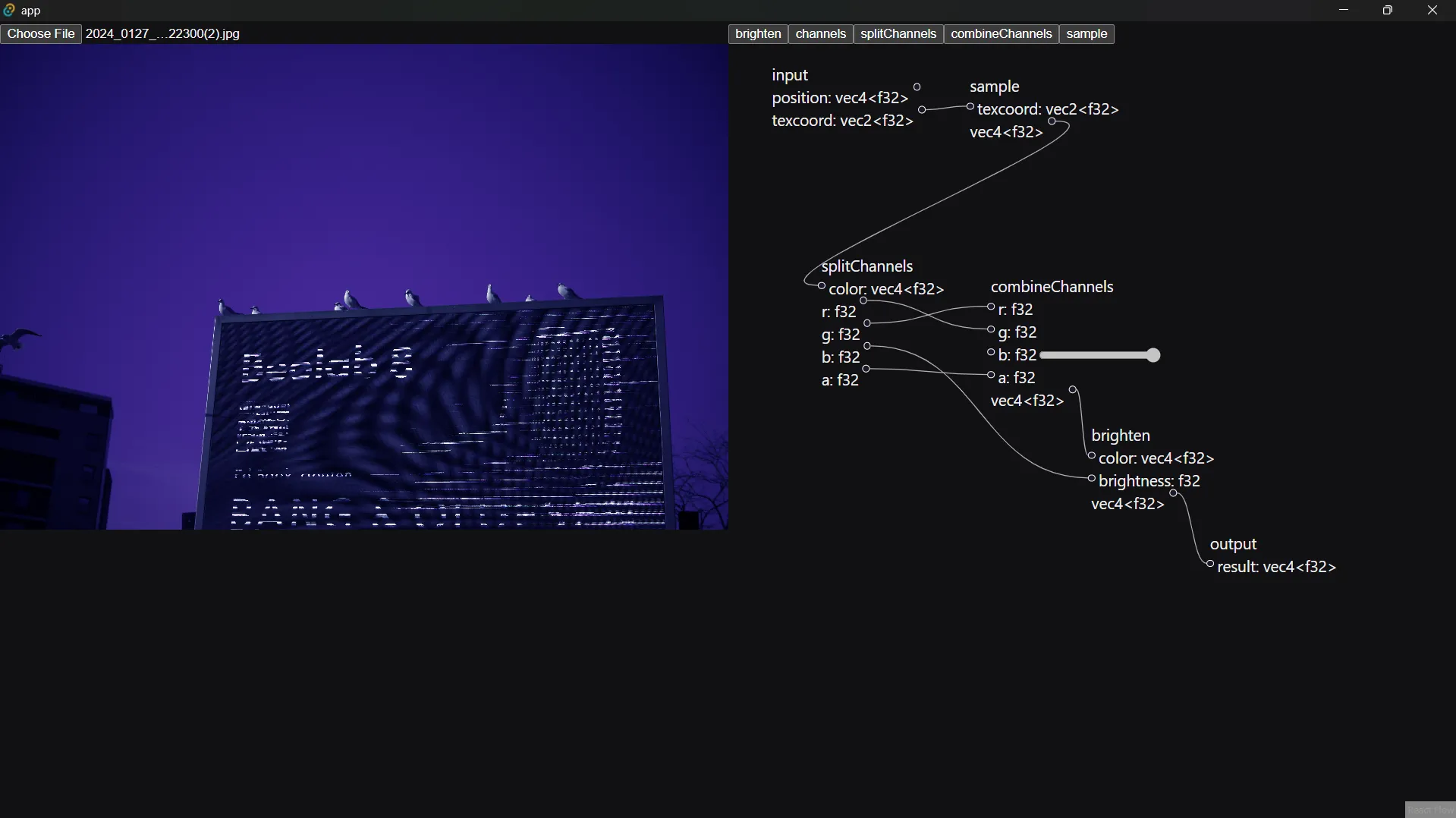

So, it’s all well and good that I can compile a shader, but as I have it right now a user can’t really customize what any of these values do. It would be nice if I could allow users to provide some input to the shader. For this we will use a uniform which is basically an input to the GPU. Uniforms are nice because we can change them without having to recompile the shader

For my purpose, I can infer that any input to a node that is not specified is meant to be set by the user, we can depict this using some kind of input field for that value, in the example below we use a range input for a f32 value, this can be set by the user:

Then, when compiling our nodes, we can extract this to a binding, which would look something like this:

1@group(1) @binding(0) var<uniform> combineChannelsInputB: f32;And that can be used in the fragment shader as follows:

1@fragment fn fs(fsInput: VertexOutput) -> @location(0) vec4f {2 var value1 = sample(input.texcoord);3 var value2 = splitChannels(value1);4 var value3 = combineChannels(value2.g, value2.r, combineChannelsInputB, value2.a);5 var value4 = brighten(value3, value2.b);6 return value4;7}Now, actually passing this value to the shader is a bit messy but really comes down to using the WebGPU API. This effectively involves creating a bindGroup adding buffers which hold the value of the data

1const dynamicBuffers: Record<BindingId, GPUBuffer> = {}2

3const entries: GPUBindGroupEntry[] = []4for (const { id, bindings } of shader.compiled.compiledNodes) {5 for (const binding of bindings) {6 const buffer = device.createBuffer({7 size: binding.size,8 usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST,9 label: 'dynamic binding for: ' + binding.declaration,10 })11

12 buffer.unmap()13

14 entries.push({15 binding: binding.binding,16 resource: {17 buffer,18 },19 })20

21 dynamicBuffers[`${id}.${binding.name}`] = buffer22 }23}24

25const dynamicBindGroup =26 entries.length &&27 device.createBindGroup({28 label: 'dynamic bind group',29 layout: pipeline.getBindGroupLayout(1),30 entries,31 })Whenever the user changes some input the buffer values can be updates like so:

1for (const [groupId, group] of Object.entries(data || {})) {2 for (const [bindingId, value] of Object.entries(group || {})) {3 const buffer = dynamicBuffers[`${groupId}.${bindingId}`]4 if (buffer) {5 device.queue.writeBuffer(buffer, 0, value)6 }7 }8}Now, there are some finicky bits involved in assembling all the data here but these are the important bits around using the API

Closing

As you can see there are quite a few moving pieces but I feel like the approach I’ve taken here fits the problem relatively well. As things are defined now, all app functionality is driven by what is available in the shader and the different nodes can be inferred and combined however the user wants

This isn’t the end though. There’s a lot more to do, and a lot of art to be made

References

The most important resource in making this work is defintely the WebGPU Fundamentals Site. The UI is inspired by Blender’s Node Editor and is implemented using React Flow

For the purpose of implementation, I’ve also looked at loads of different things, of notable value were the following: